APST is introducing digital speech and facial expression analysis via the ALS app in a model project. The analysis of speech and facial expressions in amyotrophic lateral sclerosis (ALS) is useful because the speech and facial muscles are affected during the course of the disease.

The analysis of speech and facial expressions is of great interest for the treatment of ALS: in the future, it could be used to assess the therapeutic efficacy of medications and other treatment procedures.

Since 1991, the ALS Functional Rating Scale (ALSFRS) has been the accepted tool for assessing speech impairments in ALS. However, the ALSFRS questionnaire is a relatively imprecise tool for detecting disease progression. Digital analysis of speech and facial expressions could provide an equivalent or even better method for detecting disease progression.

Introduction of a new technology

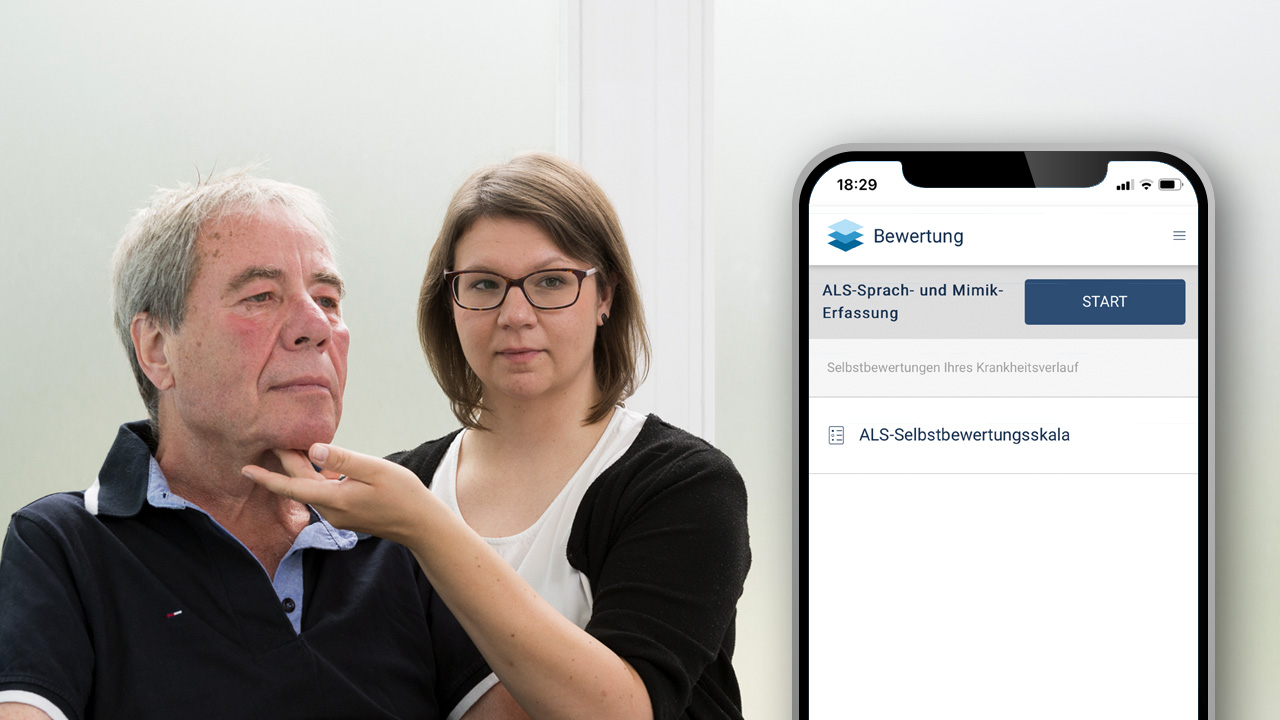

By integrating speech and facial expression analysis software into the ALS app, we hope to improve the detection of ALS progression. As a first step, we would like to establish the method of speech and facial expression analysis via the ALS app and learn how ALS patients use the new technique.

In a second step, we would like to apply the speech and facial expression analysis as a method comparable or potentially even superior to the ALSFRS questionnaire for recording the disease progression of ALS. We would like to address the following research questions with the software in the near future:

- Is there a correlation between changes in speech and facial expressions and the ALSFRS questionnaire? That is, can changes found by speech and facial expression analysis also be detected with the ALSFRS questionnaire?

- Is there a correlation between speech and facial expression analysis and ALS therapy? That is, can the therapeutic effects of new medications (e.g., tofersen and study drugs) and other ALS therapies (e.g., high-calorie nutrition therapy or non-invasive/invasive ventilation) be detected with speech and facial expression analysis?

How does speech and facial expression capture work via the ALS app?

Speech and facial expression capture via the ALS app takes approximately 5 to 10 minutes per session. During this process, the analysis software guides you through a short, standardized program of questions and answers, voice and reading exercises, and free speech. As you speak, your smartphone's camera and microphone capture the movements of your facial muscles and your speech. The program compares speech and facial expressions between sessions and, for each change it finds, assesses how severe the impairment is.

Participants wanted for the model project on speech and facial expression recording

We would currently like to establish the technical method in a model project with a group of 30 ALS patients. For this purpose, we are still looking for patients who are willing to use the ALS app with speech and facial expression analysis on their smartphone. If you are interested in the project, please contact our app manager Friedrich Schaudinn by phone or email.

f.schaudinn@ambulanzpartner.de

Phone: 030 81031410

Mobile: 0170-5564502